2 Student approximation when σ value is unknown.

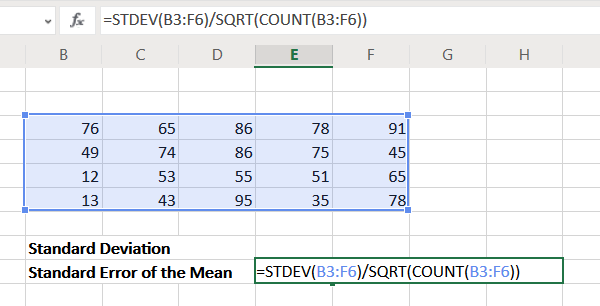

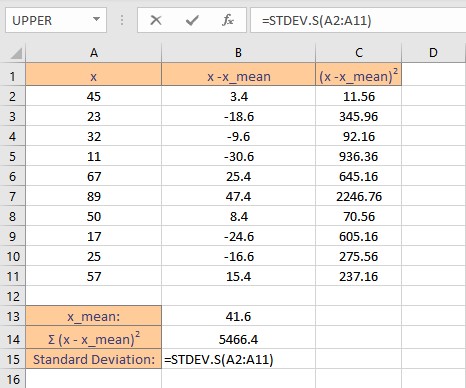

The standard error (SE) of a statistic (usually an estimate of a parameter) is the standard deviation of its sampling distribution, or an estimate of that standard deviation. If the statistic is the sample mean, it is called the standard error of the mean (SEM).

The sampling distribution of a mean is generated by repeatedly sampling from the same population and recording the sample means obtained. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling distribution is equal to the variance of the population divided by the sample size, because sample means cluster more closely around the population mean as the sample size increases. It follows that, for a given sample size, the standard error of the mean equals the standard deviation divided by the square root of the sample size. In other words, the standard error of the mean is a measure of the dispersion of sample means around the population mean.

In regression analysis, the term "standard error" refers either to the square root of the reduced chi-squared statistic or to the standard error of a particular regression coefficient (as used in, say, confidence intervals).

An online standard error calculator can estimate the SEM from a given data set and show the step-by-step calculations. In R, note that `stderr` does not calculate the standard error: the name is already used in base, so a user-written standard error function should be given a different name.
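The calculation described above (standard deviation divided by the square root of the sample size) can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `standard_error_of_mean` and the data values are made up for the example, and the sample standard deviation (with the n − 1 divisor) is assumed as the estimate of the population standard deviation.

```python
import math
import statistics


def standard_error_of_mean(values):
    """Estimate the SEM as the sample standard deviation over sqrt(n)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    # statistics.stdev uses the n - 1 divisor (sample standard deviation).
    sample_sd = statistics.stdev(values)
    return sample_sd / math.sqrt(n)


# Hypothetical data, chosen only to make the arithmetic easy to check by hand.
data = [2, 4, 4, 4, 5, 5, 7, 9]
print(round(standard_error_of_mean(data), 4))  # 0.7559
```

For this data the sample variance is 32/7 and n = 8, so the SEM is √(4/7) ≈ 0.7559.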

The standard error (SE) of the sample mean is the standard deviation of the distribution of sample means. It gives analysts an estimate of the variability they would expect if they were to draw multiple samples from the same population. While the standard deviation measures the variability within one sample, the standard error estimates the variability between many samples.

Standard Error of the Sample Mean Formula

The standard error of the mean is the ratio of the standard deviation to the square root of the sample size. Provided the population standard deviation, σ, is known, analysts use the following formula for the standard error of the sample mean, denoted \(\sigma_{\bar{x}}\):

$$ \sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}} $$

However, the population standard deviation, σ, is usually unknown. In such a case, the following formula is used to estimate the standard error of the sample mean, denoted \(S_{\bar{x}}\):

$$ S_{\bar{x}} = \frac{S}{\sqrt{n}} $$

where S is the sample standard deviation and \(S^2 = \cfrac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n-1}\).

In short, SEM = SD/√n, where SD is the standard deviation and n is the number of observations. Because √n appears in the denominator, increasing the sample size reduces the SE of the sample mean.

For a value that is sampled with an unbiased, normally distributed error, the figure above depicts the proportion of samples that would fall between 0, 1, 2, and 3 standard deviations above and below the actual value.

The same idea applies to statistics other than the mean. For example, using R, it is simple enough to calculate the mean and median of 1000 observations selected at random from a normal population (\(\mu_x = 0.1\), \(\sigma_x = 10\)). Repeating this calculation 5000 times, we found that the standard deviation of the 5000 medians (0.40645) was 1.25404 times the standard deviation of the means.
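The repeated-sampling experiment mentioned above can be sketched as follows. The text refers to R; this sketch uses Python's standard library instead and is not the original code. The function name `sd_of_means_and_medians`, the seed, and the population parameters (mean 0.1, standard deviation 10) are assumptions made for illustration, so the printed numbers will not match the quoted figures exactly.

```python
import random
import statistics


def sd_of_means_and_medians(n_obs=1000, n_reps=5000, mu=0.1, sigma=10.0, seed=0):
    """Draw n_reps normal samples of size n_obs and return the standard
    deviation of the sample means and of the sample medians."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    means, medians = [], []
    for _ in range(n_reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n_obs)]
        means.append(statistics.fmean(sample))
        medians.append(statistics.median(sample))
    return statistics.stdev(means), statistics.stdev(medians)


# Fewer repetitions than the 5000 in the text, to keep the demo quick.
sd_means, sd_medians = sd_of_means_and_medians(n_reps=500)
print("SD of means:  ", sd_means)
print("SD of medians:", sd_medians)
print("ratio:        ", sd_medians / sd_means)
```

With σ = 10 and 1000 observations per sample, the standard deviation of the means should land near 10/√1000 ≈ 0.316. For a normal population the ratio of the two standard deviations tends toward √(π/2) ≈ 1.2533 as the sample size grows, which is in line with the 1.25404 reported above.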
